A break from Node.js, Let's talk about AI Security Pitfalls

LLMs and GenAI Security Pitfalls

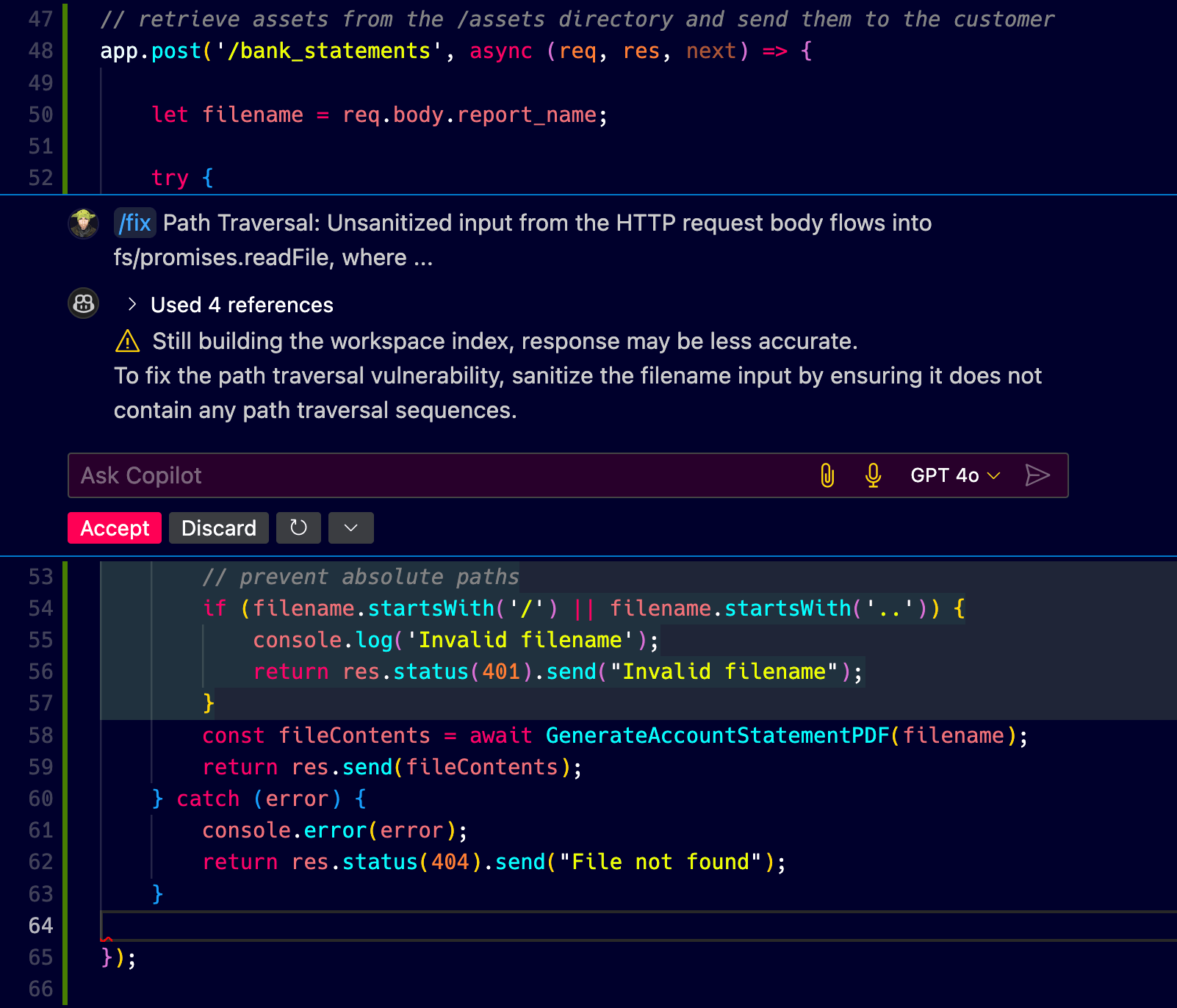

Will You Accept These GPT 4o Secure Coding Recommendations? - Using AI Code assistants powered by LLMs are a great productivity boost, but are they also free from vulnerabilities? Not really. Not even the GPT 4o model. Let me show you GPT 4o failure in practice.

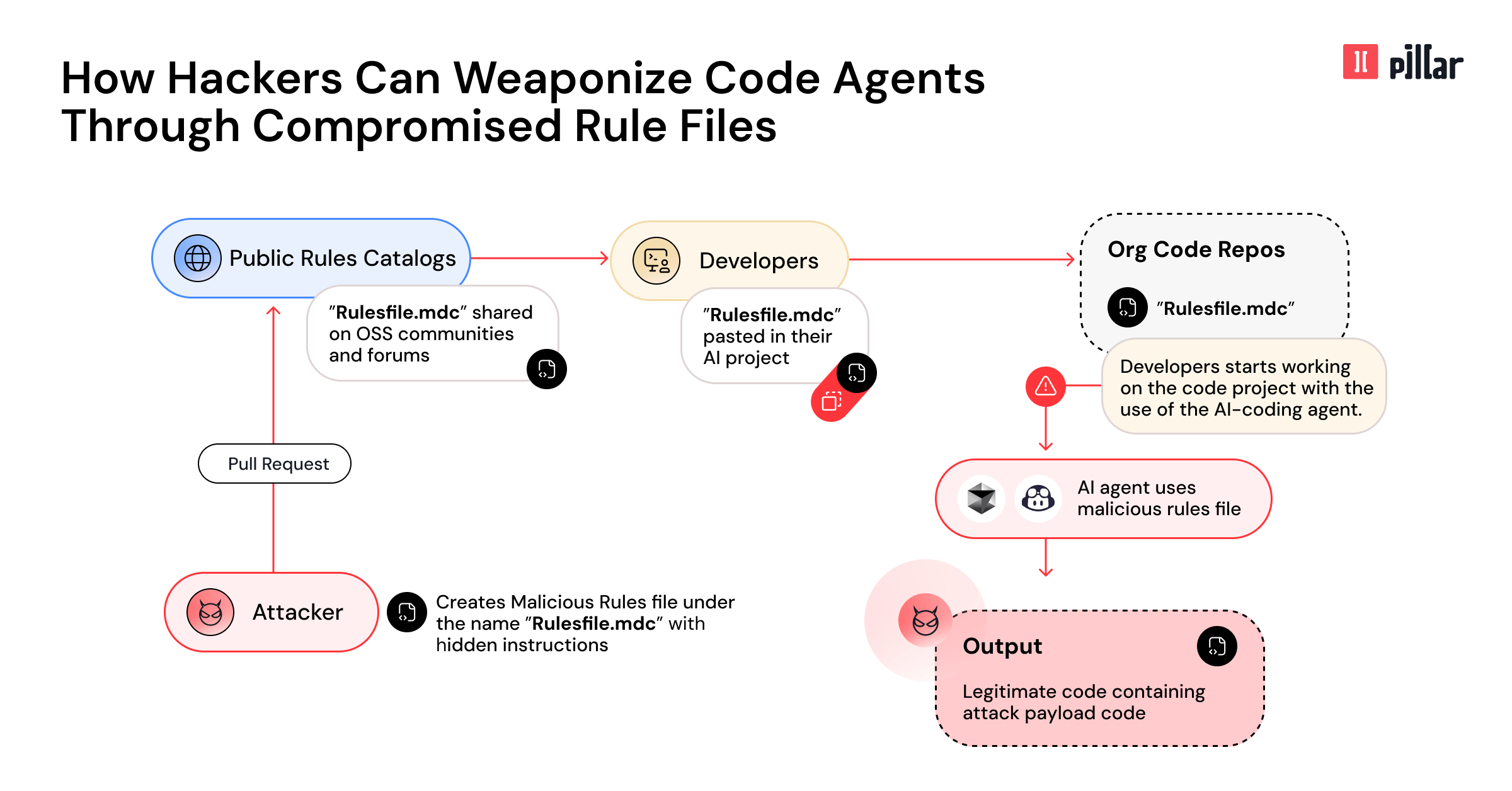

New Vulnerability in GitHub Copilot and Cursor: How Hackers Can Weaponize Code Agents - Pillar Security published an interesting take on how to utilize Trojan Source style malicious attacks using invisible characters to introduce prompt injections into Cursorrules files and GitHub Copilot code completions.

Secure Coding Conventions Never Die

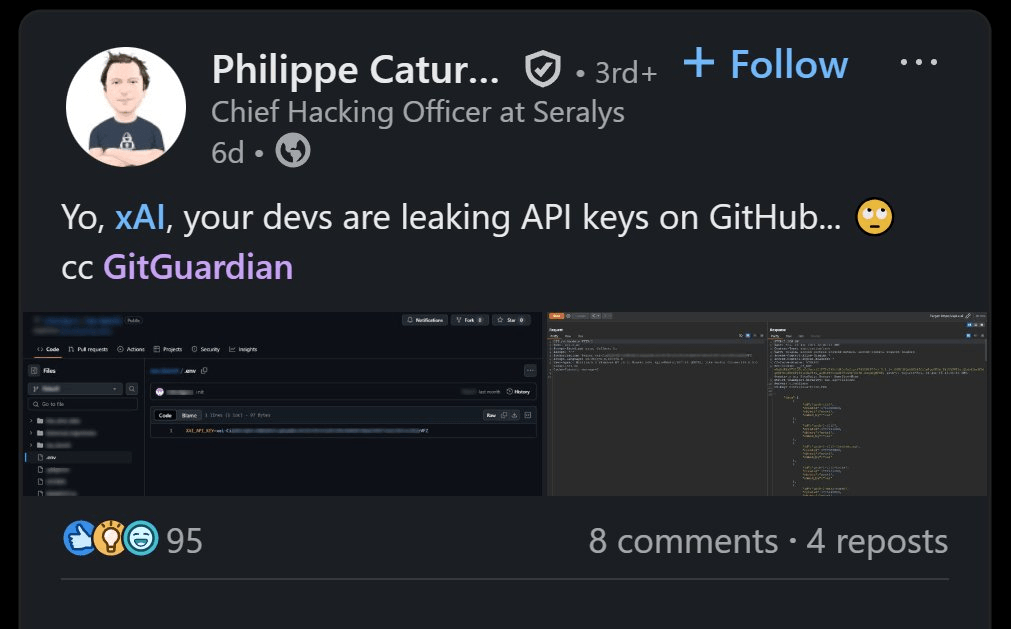

Remember my Security of Environment Variables write-up? - Well, it turns out even massive companies like XAI leak credentials like their API keys:

Vibe Coding Invites Security Risks

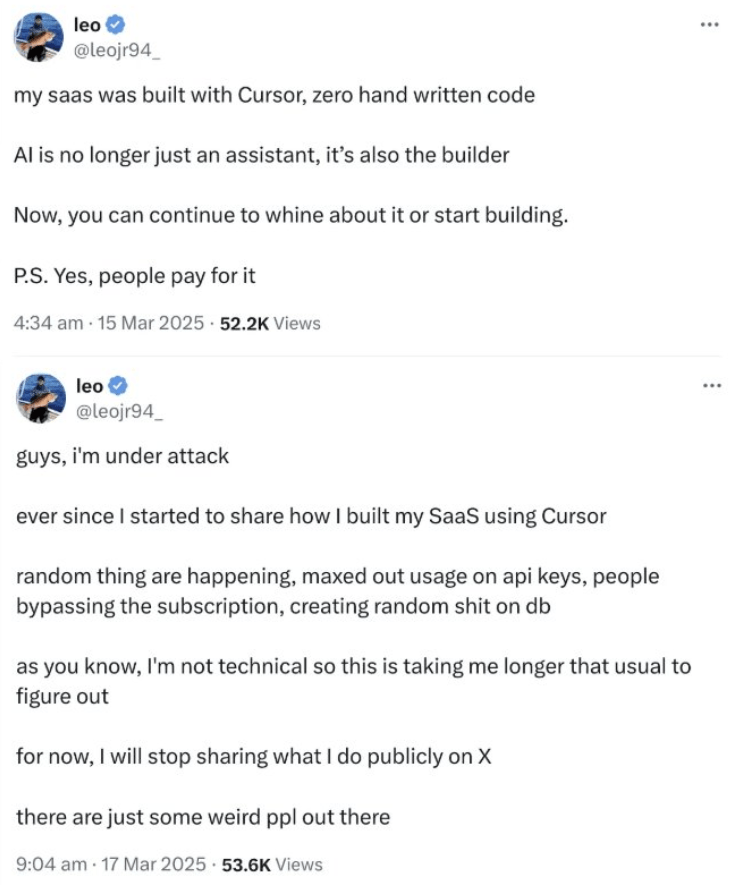

Vibe coding is fun and all but do you understand the security trade-offs vs productivity gains? Leo’s story is a tale of bad security practices from CORS, to hard-coded API keys, no rate limits and more. If you ship software and make it available on the public Internet, you’ll eventually face drive-by attackers or worse:

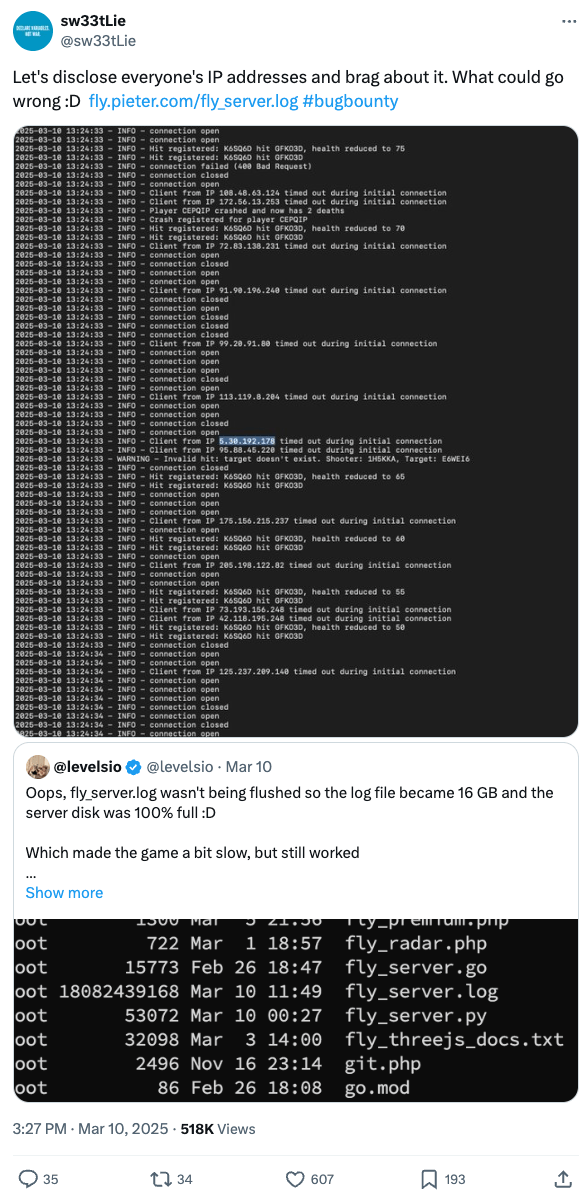

Pieter Levels, a well known indie hacker and solopreneur, ventured into vibe coding an airplanes game (which is super fun, I played it too!) but ended up with several security issues, let’s re-cap them via various tweets:

AI Security Resources

- Meta releases LlamaFirewall - The framework to detect and mitigate AI centric security risks.

📦 On GitHub

fastify/demo - A concrete example of a Fastify application using what are considered best practices by the Fastify community.

❗ New Security Vulnerabilities

- solid-js found vulnerable to CVE-2025-27109 Cross-site Scripting, 21 Feb 2025

- docgpt found vulnerable to CVE-2025-0868 Command Injection, 20 Feb 2025